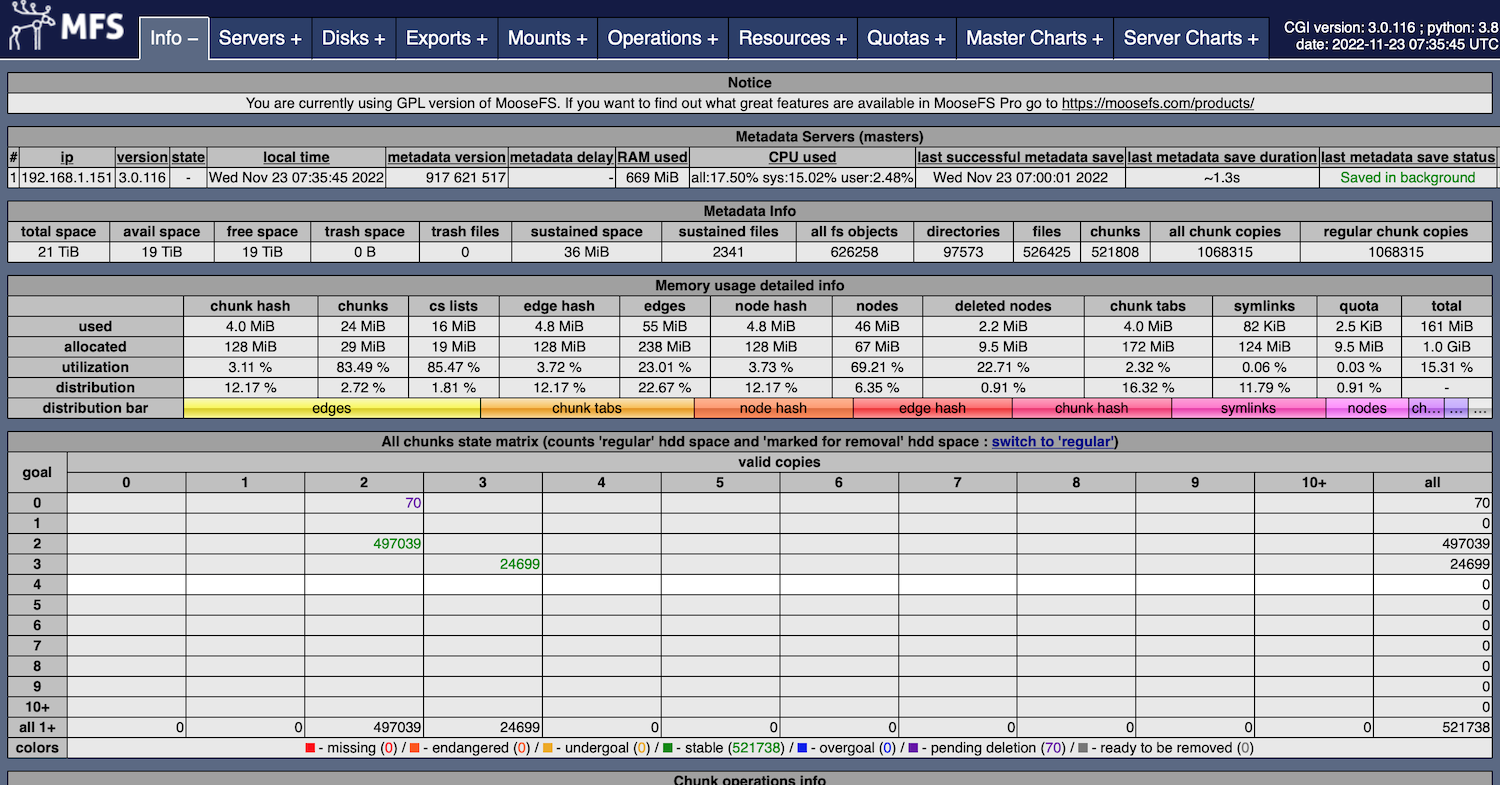

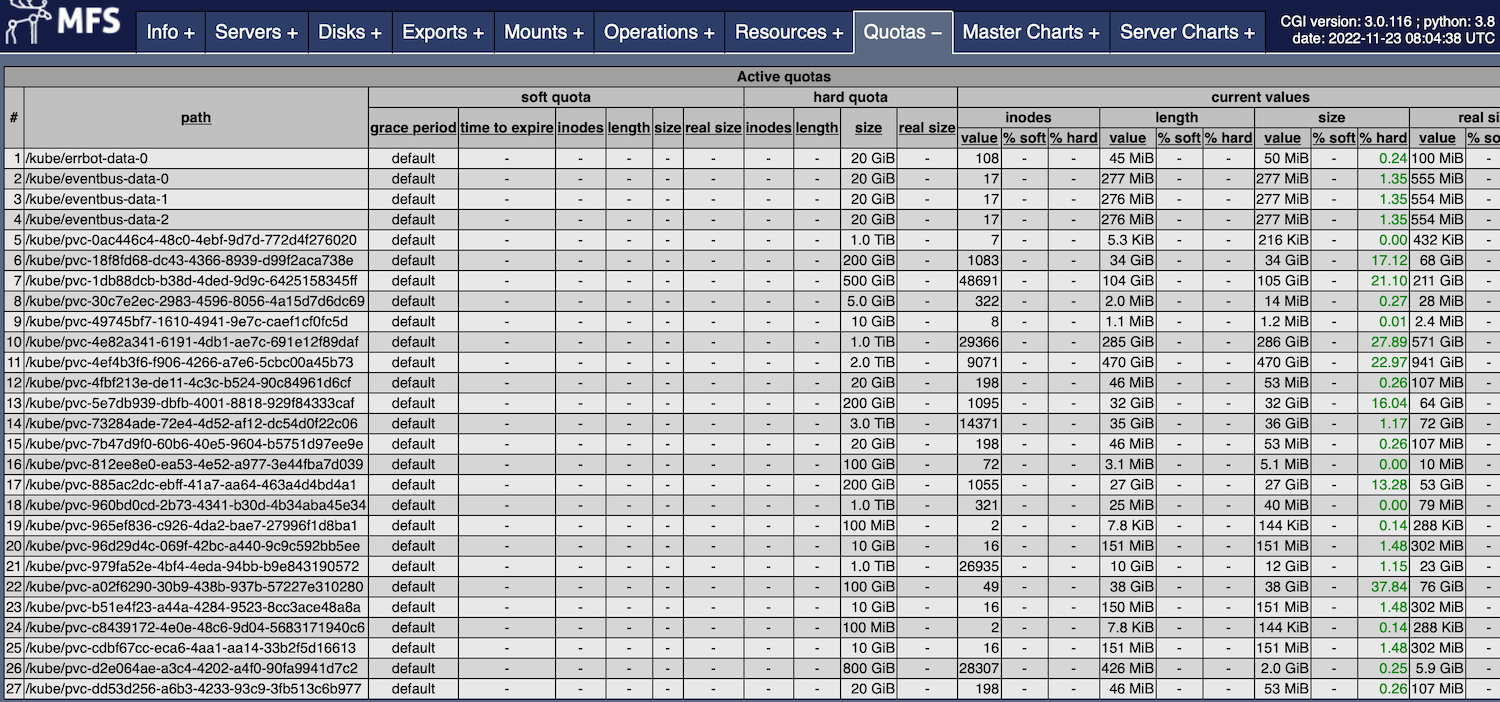

I designed a rudimentary Kubernetes PersistentVolumeClaim solution myself, using MooseFS on the backend as the distributed data store. It is based on the Kubernetes event mechanism: when a PVC creation event is received, the backend controller creates the corresponding PV on MooseFS and binds it to the PVC. To let applications move freely between nodes, I mount the MooseFS file system on every worker node. This raises a problem: how do I keep the MooseFS master highly available?
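The provisioning step can be sketched in Python as follows. This is a minimal illustration, not the controller described above. It assumes MooseFS is mounted at the same path on every worker node (the `/mnt/mfs/k8s-volumes` path and the `handle_pvc_added` name are hypothetical). It also assumes each volume is exposed as a `hostPath` PV that is pre-bound to its claim through `claimRef`.

```python
import os
import tempfile

# Hypothetical: directory on the MooseFS mount (same path on every worker node).
MFS_ROOT = "/mnt/mfs/k8s-volumes"


def handle_pvc_added(pvc: dict, mfs_root: str = MFS_ROOT) -> dict:
    """Create a MooseFS directory for a new PVC and return a PV manifest pre-bound to it."""
    namespace = pvc["metadata"]["namespace"]
    name = pvc["metadata"]["name"]
    spec = pvc["spec"]

    # One directory per claim: <mfs_root>/<namespace>-<name>
    volume_dir = os.path.join(mfs_root, f"{namespace}-{name}")
    os.makedirs(volume_dir, exist_ok=True)

    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": f"pv-{namespace}-{name}"},
        "spec": {
            "capacity": {"storage": spec["resources"]["requests"]["storage"]},
            "accessModes": spec.get("accessModes", ["ReadWriteMany"]),
            # Copy the claim's class so the PV and PVC stay compatible for binding.
            "storageClassName": spec.get("storageClassName", ""),
            "persistentVolumeReclaimPolicy": "Retain",
            # MooseFS is mounted on every node, so a hostPath resolves wherever the pod lands.
            "hostPath": {"path": volume_dir, "type": "Directory"},
            # claimRef pre-binds this PV to exactly the PVC that triggered it.
            "claimRef": {"namespace": namespace, "name": name},
        },
    }


if __name__ == "__main__":
    demo_pvc = {
        "metadata": {"namespace": "default", "name": "data"},
        "spec": {
            "accessModes": ["ReadWriteMany"],
            "resources": {"requests": {"storage": "1Gi"}},
        },
    }
    # A temporary directory stands in for the MooseFS mount in this demo.
    pv = handle_pvc_added(demo_pvc, tempfile.mkdtemp())
    print(pv["metadata"]["name"], pv["spec"]["hostPath"]["path"])
```

In a real controller, the PVC objects would come from a watch on PVC `ADDED` events, for example `watch.Watch().stream(...)` in the official Kubernetes Python client. The returned manifest would then be submitted with `CoreV1Api.create_persistent_volume`. Both calls are left out so the sketch runs without a cluster.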

There are two official editions of MooseFS: the open-source edition and Pro. Pro comes with built-in HA, while the open-source edition can only back up the master, so when the master fails it has to be restored manually. To meet my high-availability requirement, I applied for one month of access to Pro. The official price of a permanent license is 1425 EUR per 10 TiB, which is far too expensive for my personal Raspberry Pi setup. I also benchmarked the Pro mfsmaster and found no significant performance improvement over the open-source version. Given that mfsmaster is a single-threaded application anyway, I decided to maintain the MFS code myself from now on and extend its Kubernetes functionality. After some research, I arrived at the MFS master high-availability solution shown in the figure above.

The open-source MFS master HA setup consists of three parts:

- mfsmaster: stores the MFS metadata.
- DRBD: replicates a block device over the network in real time, similar to RAID 1 across two machines.
- Keepalived: monitors the health of both mfsmasters and moves the virtual IP (VIP) between them.
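Keepalived judges a node's health by running a `vrrp_script` and checking its exit code, where 0 means healthy. The check below is a minimal sketch that treats "healthy" as "mfsmaster accepts TCP connections on its client port." Port 9421 is assumed to be the default; confirm it against your `mfsmaster.cfg`. The `check_mfsmaster.py` and `mfsmaster_alive` names are hypothetical.

```python
import socket
import sys

# Assumed default mfsmaster client port; confirm against your mfsmaster.cfg.
DEFAULT_PORT = 9421


def mfsmaster_alive(host: str, port: int = DEFAULT_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to mfsmaster succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable or timed out (socket.timeout is an OSError)
        return False


def main(argv: list) -> int:
    """Keepalived convention: exit 0 = healthy, non-zero = failed."""
    host = argv[0] if argv else "127.0.0.1"
    port = int(argv[1]) if len(argv) > 1 else DEFAULT_PORT
    return 0 if mfsmaster_alive(host, port) else 1


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. script "/usr/local/bin/check_mfsmaster.py 127.0.0.1 9421"
    sys.exit(main(sys.argv[1:]))
```

Inside a `vrrp_script` block, `interval` controls how often the script runs and `fall` sets how many consecutive failures mark the node as failed. A successful TCP connect only proves that the port is open, not that metadata operations work, so a stricter check may be worthwhile.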

When mfsmaster A fails, Keepalived moves the VIP to mfsmaster B. A is demoted from master to backup and unmounts the DRBD USB disk that holds the MFS metadata. B is promoted from backup to master, mounts the DRBD USB disk with the metadata, and starts mfsmaster, which completes the failover.
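The failover sequence can be sketched as a script that Keepalived calls from its `notify_master`, `notify_backup` and `notify_fault` hooks. This is a hedged sketch with several assumptions. The DRBD resource `r0`, the device `/dev/drbd0` and the mount point `/var/lib/mfs` are hypothetical. Stopping mfsmaster before unmounting is an added step, since a file system still in use cannot be unmounted. By default the script only prints the commands, and `--apply` executes them.

```python
import subprocess
import sys

# Hypothetical values: adjust to your DRBD resource, device and mfsmaster data directory.
DRBD_RESOURCE = "r0"
DRBD_DEVICE = "/dev/drbd0"
MFS_DATA_DIR = "/var/lib/mfs"


def promote_commands() -> list:
    """Backup -> master: take over the DRBD disk, mount the metadata, start mfsmaster."""
    return [
        ["drbdadm", "primary", DRBD_RESOURCE],
        ["mount", DRBD_DEVICE, MFS_DATA_DIR],
        ["mfsmaster", "start"],
    ]


def demote_commands() -> list:
    """Master -> backup: stop mfsmaster, unmount the metadata, release the DRBD disk."""
    return [
        ["mfsmaster", "stop"],
        ["umount", MFS_DATA_DIR],
        ["drbdadm", "secondary", DRBD_RESOURCE],
    ]


def transition(state: str) -> list:
    """Map a keepalived state name to the commands this node must run."""
    if state == "master":
        return promote_commands()
    if state in ("backup", "fault"):
        return demote_commands()
    raise ValueError(f"unknown keepalived state: {state!r}")


def run(commands: list, apply: bool = False) -> None:
    """Print the commands, or execute them in order when apply is True."""
    for cmd in commands:
        if not apply:
            print("would run:", " ".join(cmd))
        else:
            # check=True aborts on the first failing step instead of continuing blindly
            subprocess.run(cmd, check=True)


if __name__ == "__main__" and len(sys.argv) >= 2:
    # e.g. notify_master "/usr/local/bin/mfs_failover.py master --apply"
    run(transition(sys.argv[1]), apply="--apply" in sys.argv[2:])
```

Promotion is the reverse of demotion. DRBD must be Primary before its device can be mounted, and mfsmaster needs its metadata directory mounted before it starts. If A went down uncleanly, mfsmaster on B may refuse to start from the metadata as left. MooseFS 3 provides `mfsmaster -a` to restore metadata from changelogs, but check the man page for your version.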

Before settling on MooseFS, I tested OpenEBS, Longhorn, BeeGFS, GlusterFS and other distributed storage systems, comparing their performance and ease of use. I finally chose MooseFS for three reasons. It is very easy to scale. Its client accesses the file system through FUSE, so it also works on macOS, FreeBSD and NetBSD. Its read/write performance on ARM64 was also better than that of the other systems I tested. The main drawback is that the official MFS v3 appears to be no longer maintained (its GitHub repository has not been updated), while v4 is still closed source.