Reference

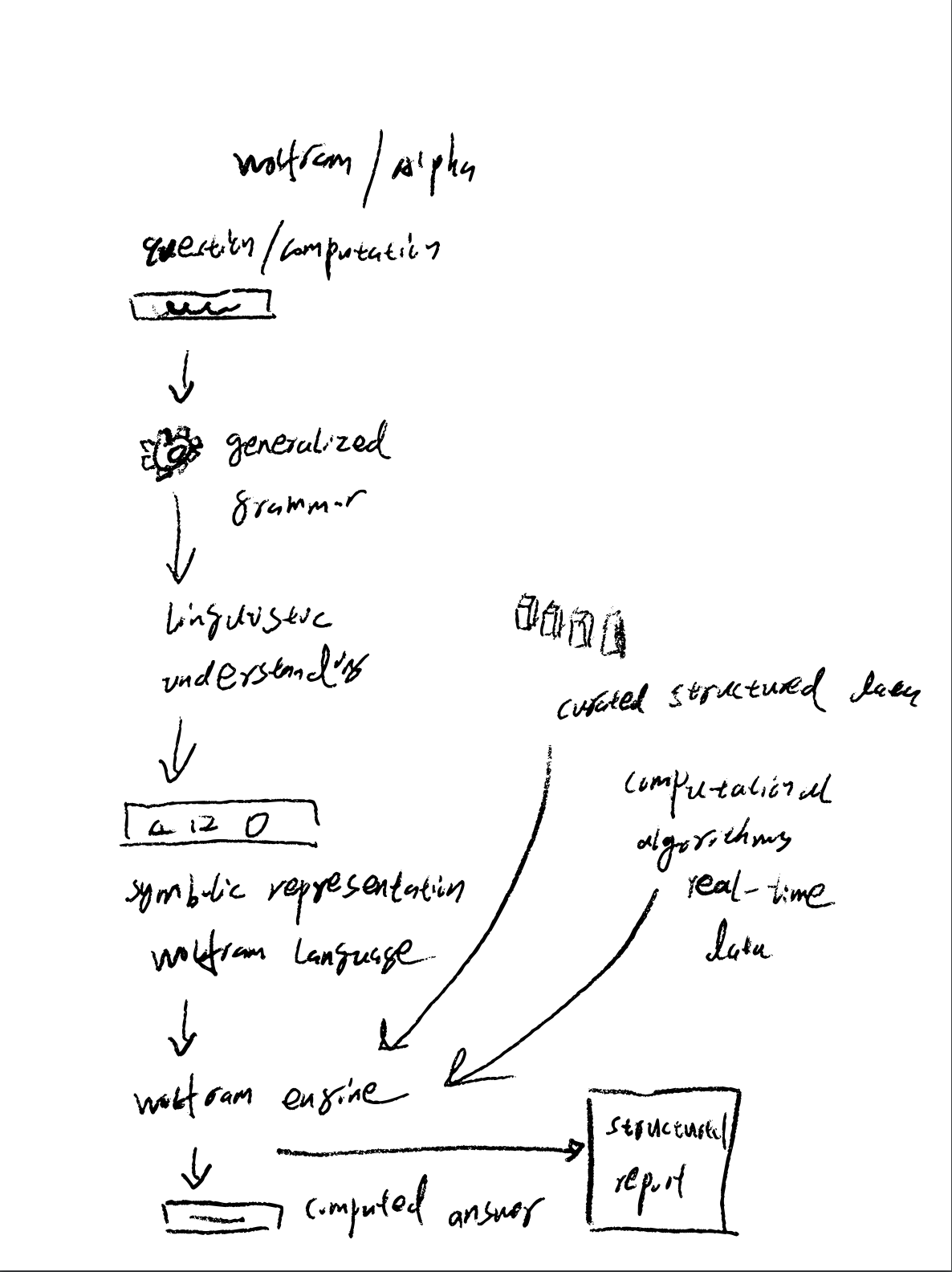

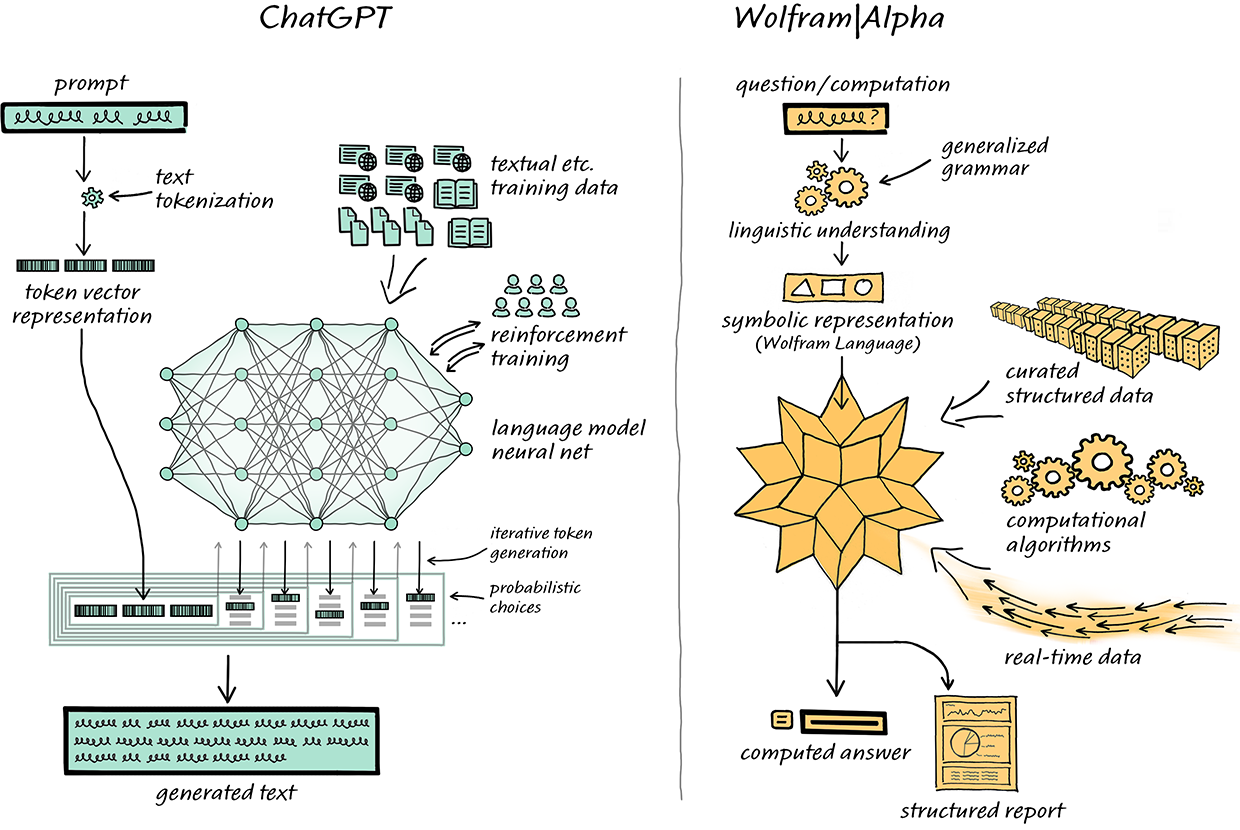

Wolfram|Alpha as the Way to Bring Computational Knowledge Superpowers to ChatGPT

ChatGPT Gets Its Wolfram Superpowers

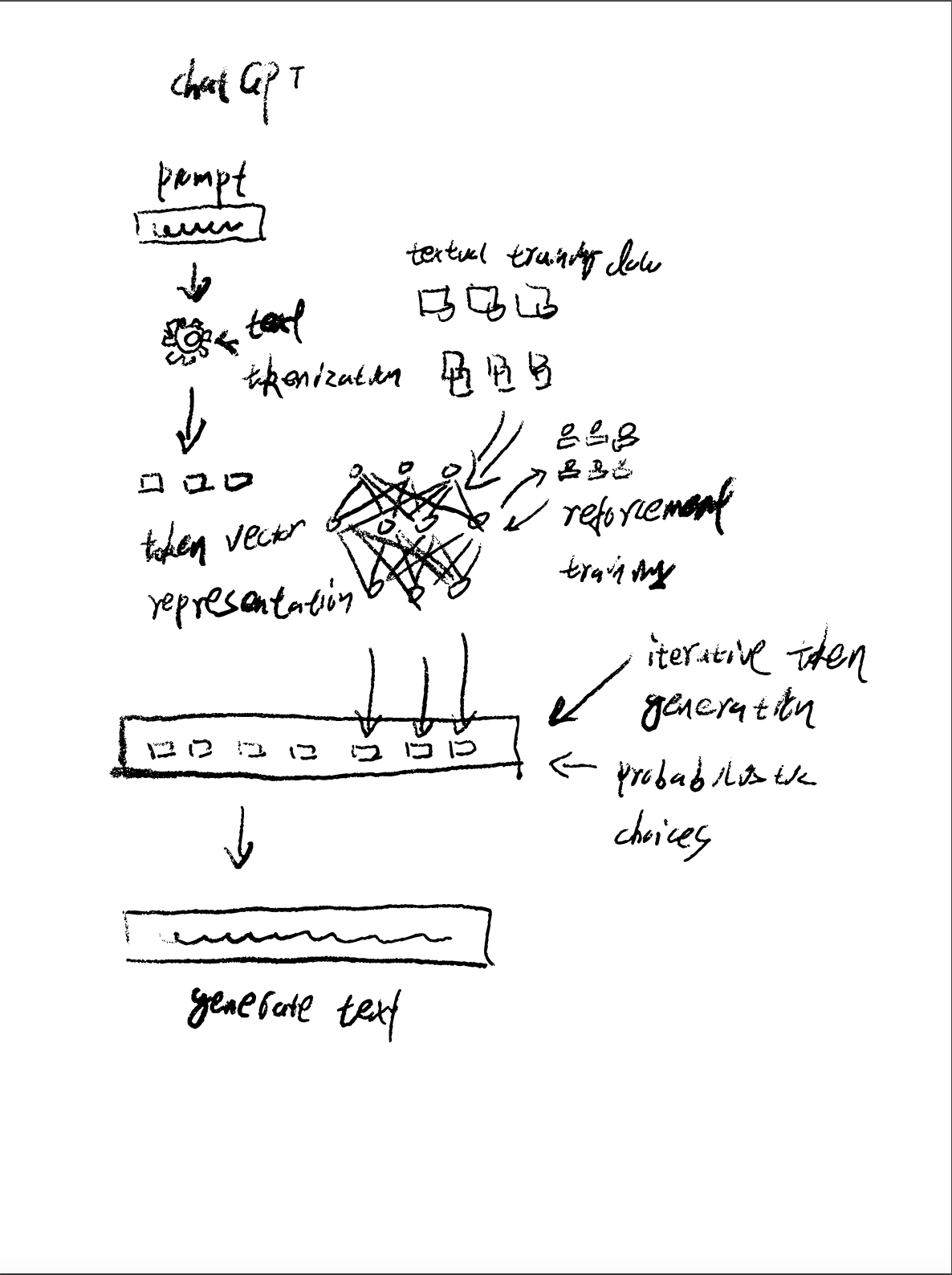

What Is ChatGPT Doing and Why Does It Work?

Next-Generation Human-Robot Interaction with ChatGPT and ROS

How it works

ChatGPT, which has attracted a lot of attention recently, has taken generative AI to a new level. So far, though, most people use it as a chat tool, and some even treat it as a substitute for Wikipedia. Because the model is generative, I think it can also serve as the interface between humans and robots: we describe the operation we want the robot to perform in a specifically defined natural language, ChatGPT understands it and translates it into a structured language similar to program source code, and that structured output is fed into the robot's system, which then carries out the corresponding operation.

So I started thinking about how to combine the RoboMaster EP with a large language model (LLM) to achieve human-robot interaction. API-based services like ChatGPT have a drawback: the models run in the cloud and access is restricted in certain countries and regions, which essentially rules them out for commercial industrial LLM applications. I wanted to run the model locally and be able to train and optimize it myself. Around that time Databricks released Dolly v2.0, whose training dataset and model are both open source and can be used commercially. After reading its introduction, I chose Dolly v2.0 as my local LLM.
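The "natural language in, structured language out" step can be sketched as a prompt that lists the allowed commands plus a strict parser that rejects anything else before it reaches the robot. This is a minimal sketch under stated assumptions: the JSON command format, the action names (`move`, `gripper`, `arm`), their parameters, and the helpers `build_prompt` and `parse_commands` are all hypothetical illustrations, not part of the RoboMaster SDK or of Dolly.

```python
import json
from dataclasses import dataclass

# Hypothetical command vocabulary: action name -> required numeric parameters.
# These names are illustrative assumptions, not the RoboMaster SDK's API.
ALLOWED_ACTIONS = {
    "move": ("x", "y", "z"),   # chassis offset: x, y in metres, z rotation in degrees
    "gripper": ("open",),      # 1 = open, 0 = close
    "arm": ("x", "y"),         # robotic-arm offset in millimetres
}

PROMPT_TEMPLATE = (
    "Translate the instruction into a JSON list of robot commands.\n"
    "Allowed actions and their parameters: {actions}\n"
    "Reply with JSON only.\n"
    "Instruction: {instruction}\n"
)


@dataclass
class Command:
    action: str
    params: dict


def build_prompt(instruction: str) -> str:
    """Tell the LLM exactly which structured commands it is allowed to emit."""
    actions = ", ".join(
        f"{name}({', '.join(keys)})" for name, keys in ALLOWED_ACTIONS.items()
    )
    return PROMPT_TEMPLATE.format(actions=actions, instruction=instruction)


def parse_commands(llm_output: str) -> list:
    """Extract and validate the JSON command list before anything reaches the robot."""
    start, end = llm_output.find("["), llm_output.rfind("]")
    if start == -1 or end < start:
        raise ValueError("no JSON list found in the model output")
    # json.JSONDecodeError is a subclass of ValueError, so callers catch one type.
    raw = json.loads(llm_output[start : end + 1])
    commands = []
    for item in raw:
        action = item.get("action") if isinstance(item, dict) else None
        if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
            raise ValueError(f"rejected command: {item!r}")
        keys = ALLOWED_ACTIONS[action]
        missing = [k for k in keys if k not in item]
        if missing:
            raise ValueError(f"{action} is missing parameters {missing}")
        commands.append(Command(action, {k: float(item[k]) for k in keys}))
    return commands


if __name__ == "__main__":
    print(build_prompt("Move forward half a metre, then open the gripper."))
    # A model reply often wraps the JSON in extra prose; the parser tolerates that.
    reply = 'Sure! [{"action": "move", "x": 0.5, "y": 0, "z": 0}, {"action": "gripper", "open": 1}]'
    for cmd in parse_commands(reply):
        print(cmd)
```

Validating against a fixed whitelist is a deliberate choice here: the LLM's output is treated as untrusted text, so a hallucinated action or a missing parameter fails loudly instead of moving the robot.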

The whole system above is built on ROS2 (Robot Operating System 2). The Dolly and Whisper models run on a local server, so the robot-with-GPT prototype can be built and tested quickly on local hardware.
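One local round trip (speech, then Whisper transcription, then Dolly generation, then a command sent toward the robot) can be sketched as a small pipeline whose stages are injected callables. This is a minimal sketch, assuming the class name `VoiceCommandPipeline` and its fields are hypothetical; the commented wiring uses the openai-whisper `load_model(...).transcribe(...)` call and the Hugging Face `transformers.pipeline` usage shown on the Dolly v2 model card, but it is not exercised here, and in a ROS2 deployment `publish` would wrap an rclpy publisher rather than `print`.

```python
from dataclasses import dataclass, field
from typing import Callable

# Each stage is a plain callable so real models or test stubs can be plugged in.
# The stage names are illustrative, not a library API.
Transcriber = Callable[[str], str]   # audio file path -> transcribed text
Generator = Callable[[str], str]     # prompt -> raw LLM reply
Publisher = Callable[[str], None]    # structured command -> robot side


@dataclass
class VoiceCommandPipeline:
    transcribe: Transcriber
    generate: Generator
    publish: Publisher
    prompt_prefix: str = "Convert the instruction into robot commands (JSON only):\n"
    history: list = field(default_factory=list)

    def handle_audio(self, audio_path: str) -> str:
        """Run one speech -> text -> LLM -> robot round trip on the local server."""
        text = self.transcribe(audio_path).strip()
        if not text:
            return ""  # nothing was said, so the LLM is not called
        reply = self.generate(self.prompt_prefix + text).strip()
        self.history.append((text, reply))
        self.publish(reply)
        return reply


# Assumed wiring to real local models (requires openai-whisper and transformers):
#   import whisper
#   from transformers import pipeline
#   asr = whisper.load_model("base")
#   dolly = pipeline(model="databricks/dolly-v2-3b", trust_remote_code=True)
#   pipe = VoiceCommandPipeline(
#       transcribe=lambda path: asr.transcribe(path)["text"],
#       generate=lambda prompt: dolly(prompt)[0]["generated_text"],
#       publish=print,  # replace with a wrapper around an rclpy publisher
#   )
#   pipe.handle_audio("command.wav")
```

Keeping the models behind plain callables means the same pipeline can be tested with stubs on a laptop and then pointed at the GPU-backed Whisper and Dolly instances on the local server.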

Kubernetes + ROS2 + GPT + Whisper

Now imagine we have a Kubernetes infrastructure in the cloud and want a remote robot to use a model like ChatGPT. The robot itself does not have the hardware to run such a model, so the model runs in the cloud and the robot accesses it over a high-speed network connection. In the cloud, Kubernetes allocates and schedules the resources the model needs, for example assigning GPU or TPU resources for the neural network's forward computation. ROS2 is the software layer that connects the robots to the cloud, so that, in principle, we could coordinate tens of thousands of robots to complete tasks while they understand and help the people around them.
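Assigning a GPU to the model server in Kubernetes comes down to requesting an extended resource in the container spec. The sketch below builds such a Deployment as a Python dict and prints it as JSON, which `kubectl apply -f` accepts as well as YAML. It assumes the cluster runs the NVIDIA device plugin (which exposes `nvidia.com/gpu`); the deployment name, image, and port 8000 are hypothetical placeholders, and TPUs would use a provider-specific resource name instead.

```python
import json


def model_server_deployment(name: str, image: str, replicas: int = 1, gpus: int = 1) -> dict:
    """Build a Kubernetes Deployment whose pods each ask the scheduler for GPUs."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": dict(labels)},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": 8000}],  # hypothetical serving port
                            # GPUs are requested under limits; the scheduler only places
                            # the pod on a node with that many free nvidia.com/gpu units.
                            "resources": {"limits": {"nvidia.com/gpu": gpus}},
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = model_server_deployment(
        "dolly-server", "registry.example.com/dolly-server:latest", replicas=2
    )
    # Save the output to a file, then: kubectl apply -f dolly-server.json
    print(json.dumps(manifest, indent=2))
```

Raising `replicas` lets Kubernetes spread more model servers across GPU nodes as the number of connected robots grows, with a Service in front to balance requests.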